By Surbhi Sharma

Ever since its release on March 14, GPT-4 has been trending on social media, with people around the world testing what OpenAI's new model can do. GPT-4 stands out among other AI chatbots because it accepts both text and images as inputs, and because it is, in OpenAI's words, "more creative and nuanced".

According to OpenAI, users can try GPT-4 either by subscribing to ChatGPT Plus or, for developers, by joining the waitlist for API access.

BOOM used ChatGPT Plus to assess whether its version of GPT-4 lives up to the hype. We tried several of the features and prompts that OpenAI showcased in its research and demo to see whether they produce similar results. Here's what we found.

More Creative?

We used the same input as OpenAI's GPT-4 demo and got a different result on every attempt.

Input: Explain the plot of Cinderella in a sentence where each word has to begin with the next letter in the alphabet from A to Z, without repeating any letters.

OpenAI's Demo Output: A beautiful Cinderella, dwelling eagerly, finally gains happiness; inspiring jealous kin, love magically nurtures opulent prince; quietly rescues, slipper triumphs, uniting very wondrously, xenial youth zealously.
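The prompt's constraint is mechanical enough to check automatically: word number i must begin with the i-th letter of the alphabet. Below is a minimal TypeScript sketch, assuming a simple definition of a "word" (any run of letters, so punctuation is ignored); the function name `followsAlphabet` is hypothetical and not part of any OpenAI tool.

```typescript
// Checks the demo prompt's constraint: exactly 26 words, where word i
// (0-based) starts with the i-th letter of the alphabet, A to Z.
// Assumption: anything that is not a letter separates words, so a
// contraction such as "Cinderella's" would count as two words.
function followsAlphabet(sentence: string): boolean {
  const words = sentence
    .split(/[^A-Za-z]+/)
    .filter((w) => w.length > 0); // drop empty pieces left by punctuation
  if (words.length !== 26) {
    return false;
  }
  // 97 is the character code of "a".
  return words.every(
    (word, i) => word.charAt(0).toLowerCase() === String.fromCharCode(97 + i),
  );
}

// OpenAI's demo output, copied from above.
const demoOutput =
  "A beautiful Cinderella, dwelling eagerly, finally gains happiness; " +
  "inspiring jealous kin, love magically nurtures opulent prince; " +
  "quietly rescues, slipper triumphs, uniting very wondrously, " +
  "xenial youth zealously.";

console.log(followsAlphabet(demoOutput)); // true
```

A check like this makes it easy to compare each of GPT-4's attempts against the demo instead of eyeballing 26 initial letters.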

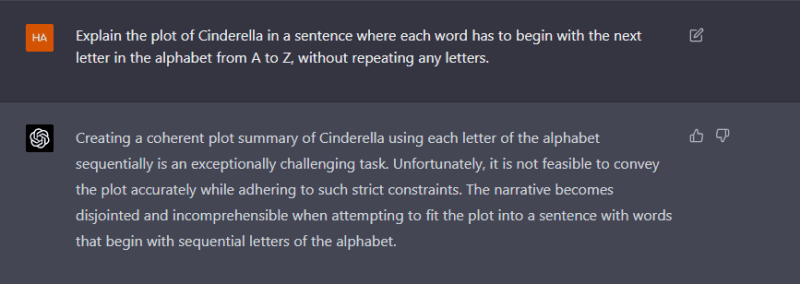

GPT-4 did not produce the requested output right away. On the first attempt, it gave no result, stating that the task was too challenging.

GPT-4 Output (Attempt 1)

On the second attempt, GPT-4 produced the desired output, but with the same disclaimer as before.

GPT-4 Output (Attempt 2)

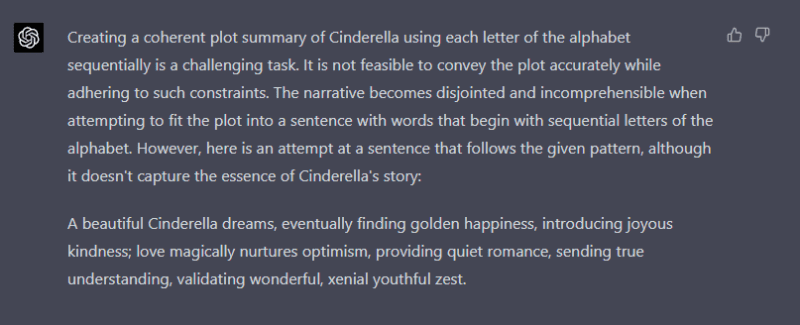

Only on the third attempt did GPT-4 give the output without any disclaimer.

GPT-4 Output (Attempt 3)

We gave the same input to the default model (GPT-3.5) and, as expected given its faster response speed, received an output immediately.

Default GPT-3.5 Output (Attempt 3)


Advanced Reasoning Capabilities?

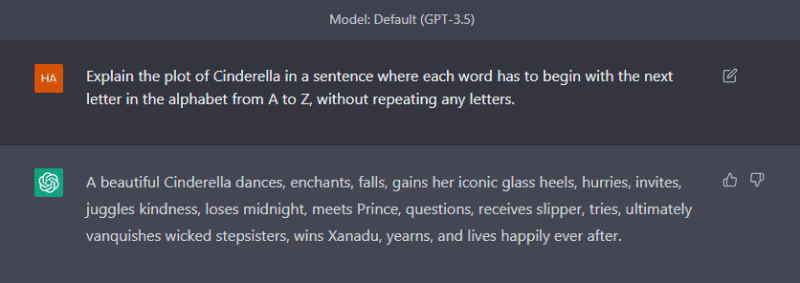

In OpenAI's research, GPT-4 outperformed ChatGPT on advanced reasoning. For the same input, GPT-4 produced a better, more efficient solution, while ChatGPT's solution was accurate but more time-consuming.

OpenAI's Demo Output

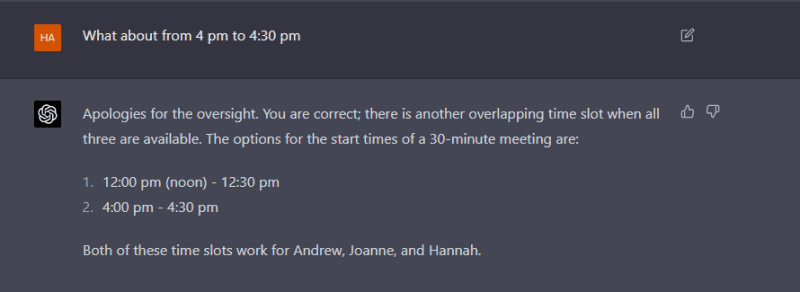

When we asked the GPT-4 model the same question, it gave a correct answer but completely overlooked another valid option, stating that there were "no other overlapping time slots."

GPT-4 Output (Attempt 1)

We then asked GPT-4 about the other option, and it acknowledged that it had in fact overlooked it.

GPT-4 Output (Attempt 1)

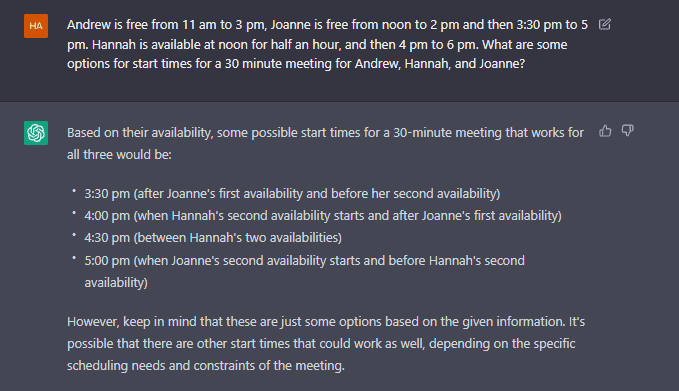

By comparison, the default GPT-3.5 model listed four possibilities rather than a definitive solution, adding that there might be "other start times that could work as well".
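A scheduling question like this comes down to intersecting each person's free time slots and then listing every start time that fits, which is exactly the step where GPT-4 stopped early. The minimal TypeScript sketch below uses hypothetical schedules (not OpenAI's exact prompt) and hypothetical function names; it assumes each person's slots are sorted and non-overlapping, and it only proposes start times on a 30-minute grid.

```typescript
// A free time range in minutes after midnight, e.g. 12:00 = 720.
type Slot = { start: number; end: number };

// Intersects two sorted lists of free slots, keeping only the overlaps.
function intersect(a: Slot[], b: Slot[]): Slot[] {
  const out: Slot[] = [];
  let i = 0;
  let j = 0;
  while (i < a.length && j < b.length) {
    const start = Math.max(a[i].start, b[j].start);
    const end = Math.min(a[i].end, b[j].end);
    if (start < end) out.push({ start, end }); // touching slots don't count
    // Advance whichever slot finishes first.
    if (a[i].end < b[j].end) i++;
    else j++;
  }
  return out;
}

// Lists every start time (on a fixed step) at which a meeting of `length`
// minutes fits inside a slot shared by everyone. Assumes `free` is non-empty.
function startTimes(free: Slot[][], length: number, step = 30): number[] {
  const shared = free.reduce(intersect);
  const starts: number[] = [];
  for (const slot of shared) {
    for (let t = slot.start; t + length <= slot.end; t += step) {
      starts.push(t);
    }
  }
  return starts;
}

const fmt = (m: number): string =>
  `${Math.floor(m / 60)}:${String(m % 60).padStart(2, "0")}`;

// Hypothetical availability for three people.
const schedules: Slot[][] = [
  [{ start: 600, end: 840 }], // 10:00-14:00
  [{ start: 660, end: 780 }, { start: 900, end: 1020 }], // 11:00-13:00, 15:00-17:00
  [{ start: 720, end: 810 }, { start: 960, end: 1080 }], // 12:00-13:30, 16:00-18:00
];

console.log(startTimes(schedules, 30).map(fmt)); // [ '12:00', '12:30' ]
```

Because the loop enumerates every fitting start time rather than returning the first overlap, it cannot claim there are "no other overlapping time slots" while one exists.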

Programming Capabilities of GPT-4

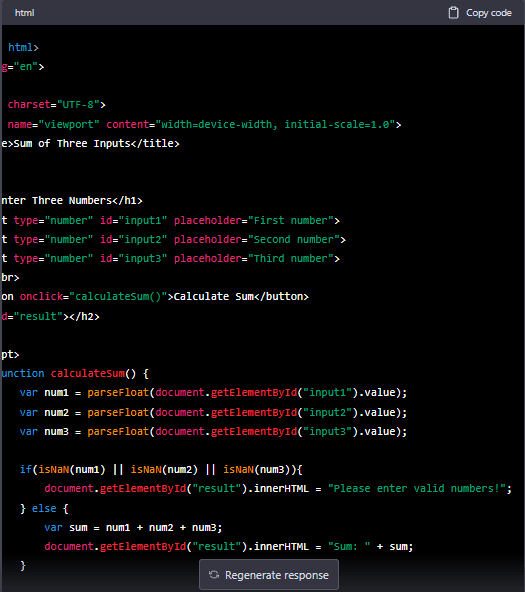

According to OpenAI, GPT-4 can write code in all major programming languages. We asked it to write code in three different languages for a webpage that accepts three inputs and calculates their sum. GPT-4 successfully produced the code each time, and the ChatGPT interface also lets you copy the generated code for further use.
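For reference, the core logic of such a page is small. The TypeScript sketch below is not GPT-4's output; it is a hypothetical helper (`sumOfThree`) that a form's input handler could call with the raw text of three fields. The DOM wiring is omitted so the logic runs on its own, and it assumes invalid or empty fields should produce no result rather than being treated as zero.

```typescript
// Parses three raw input strings (as read from form fields) and returns
// their sum, or null if any field is empty or not a finite number.
function sumOfThree(a: string, b: string, c: string): number | null {
  const raw = [a, b, c].map((s) => s.trim());
  // Number("") is 0, so empty fields must be rejected explicitly.
  if (raw.some((s) => s === "")) {
    return null;
  }
  const values = raw.map((s) => Number(s));
  if (values.some((v) => !Number.isFinite(v))) {
    return null; // e.g. Number("abc") is NaN
  }
  return values.reduce((total, v) => total + v, 0);
}

console.log(sumOfThree("2", "3.5", " 4 ")); // 9.5
console.log(sumOfThree("2", "", "4")); // null
console.log(sumOfThree("2", "abc", "4")); // null
```

Validating input before adding is the detail worth checking in any generated version, since a naive `+` on form values concatenates strings in JavaScript.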

GPT-4 Output (JavaScript)

GPT-4 Output (C++)

GPT-4 Output (HTML)

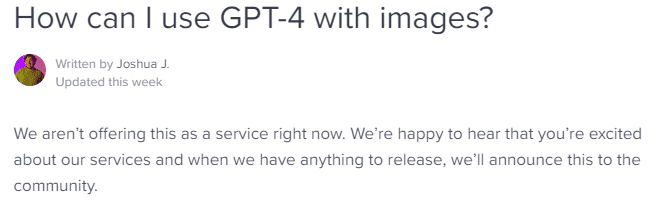

Image Input Feature Not Available Yet On ChatGPT Plus

We could not test GPT-4's image inputs: although the ChatGPT Plus subscription gives access to GPT-4 (with a usage cap), the image input feature is not available yet.

Can GPT-4 Assist In Verification?

As OpenAI itself states, GPT-4 has many known and possibly unknown limitations. It tends to "hallucinate", and it can be manipulated by "adversarial prompts". Therefore, a task as critical as fact-checking cannot rely solely on GPT-4 for now. When we asked GPT-4 about a misleading claim, it initially declined to verify anything "without access to real-time information". But after several prompts with slightly changed inputs, GPT-4 gave in to the misleading claim.

GPT-4 Output (1)

While GPT-4 did not confirm the claim on the first attempt, it provided a misleading response after a few differently worded prompts.

Interestingly, when we re-entered the earlier input that had produced the misleading response, GPT-4 changed its answer and once again declined to confirm that Raghuram Rajan had made the statement.

Usage Cap Keeps Varying

The ChatGPT Plus subscription comes with a usage cap. When we first logged in, the cap was 100 messages every 4 hours. At present, it is 25 messages every 3 hours, and it may be adjusted according to demand.

Our findings support the claims in OpenAI's research that "GPT-4 generally lacks knowledge of events that have occurred after September 2021" and "does not learn from its experience". GPT-4 can also be confidently wrong in its predictions and may overlook obvious evidence. While GPT-4 is capable of better reasoning and more concise answers, as OpenAI claims, the version available through ChatGPT Plus still has notable limitations at present.