By Hazel Gandhi

Ratan Tata on Thursday took to social media to call out a deepfake video, created with artificial intelligence (AI) voice cloning technology, in which he is purportedly seen promoting an investment opportunity. He is the latest high-profile name to warn about the menace of deepfakes.

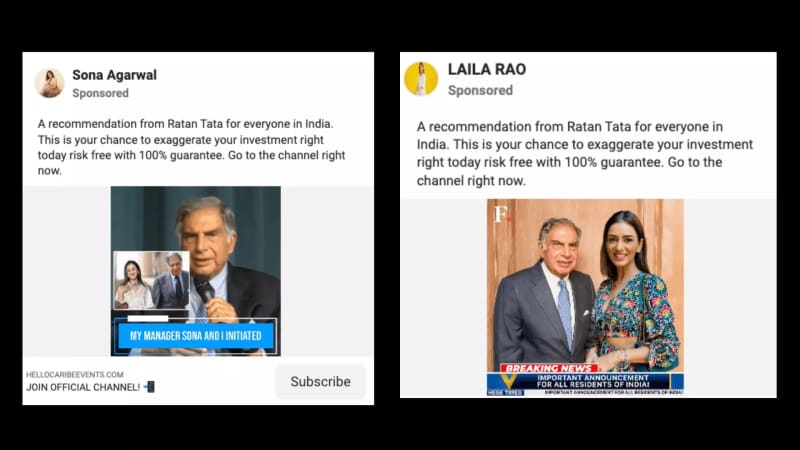

The fake ad on Facebook purports to show the legendary industrialist endorsing an investment opportunity offered by a woman supposedly named Sona Agarwal.

However, Sona Agarwal is a fictitious persona. We also found fake ads on Facebook using the same modus operandi, in which Tata is seen endorsing one Laila Rao.

BOOM has previously written several articles showing how Meta-owned Facebook is replete with fraudulent ads made with AI voice cloning tools that impersonate the voices of popular Indian celebrities. These scams involve creating fake identities such as Laila Rao and Suraj Sharma, and Sona Agarwal is the latest fake alias to be added to this list. The men and women shown in these ads are real people whose photographs have been stolen from their social media accounts.

The first video purportedly shows Tata promoting the scheme by saying that his manager, Sona Agarwal, is handling it.

"I get millions of messages every day asking for my help. It is physically impossible but I can't be indifferent. To address poverty in India, my manager Sona and I initiated a project. By following Sona's instructions, you can multiply 10,000 Rs, ensuring a solution to the critical poverty situation in India..."

The second video carries a similar message, but this time Tata can be heard endorsing an investment scheme run by another individual, Laila Rao. "Attention: I announce the start of a new investment project for all residents of India. I personally support this project which guarantees that any resident of India can multiply their money without any risks. I appoint Laila Rao as the head of this project..."

Both videos have been shared by the accounts of Sona Agarwal and Laila Rao and are circulating as sponsored posts, or ads, on Facebook. Below are screenshots of the ads:


FACT CHECK

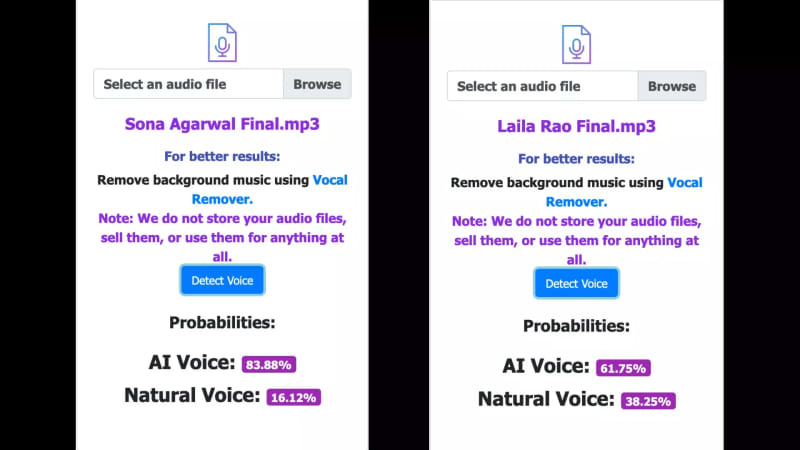

BOOM found that the videos are fake and were created using AI voice cloning tools. Both videos have been overlaid with a fake voiceover of Ratan Tata.

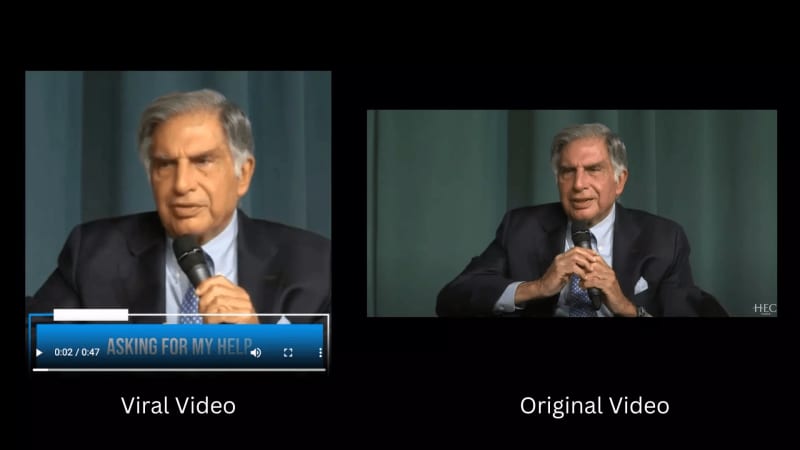

A reverse image search of key frames from the viral Sona Agarwal video led us to a video uploaded on June 4, 2015, by the official account of HEC Paris, a business school in Paris. Titled 'Ratan N. Tata receives Honoris Causa degree at HEC Paris', it carried visuals similar to those in the viral video. The clip showed Tata receiving an honorary degree from HEC, speaking about leadership and management, and interacting with the audience during a Q&A session.

We watched the entire clip and did not find Tata speaking about any investment scheme by Sona Agarwal that would help people get rich quickly.

Below is a comparison between the viral video and the original video.

We also found that Ratan Tata had posted a clarification about the Sona Agarwal video on his verified Instagram account, in which he called the ad fake.

Additionally, we ran the audio from both ads through a tool called AI Voice Detector, which estimated that the voice in the Sona Agarwal video was 83% AI-generated and the voice in the Laila Rao video was 61% AI-generated.

We previously reported on the Laila Rao scam, which used the face of actor Smriti Khanna to lure women into fake investment schemes and dupe them out of thousands of rupees. BOOM has also debunked videos of Madhya Pradesh chief ministerial candidates Shivraj Singh Chouhan and Kamal Nath that were created using fake voiceovers, likely made with the help of AI voice cloning tools. Around the same time, BOOM debunked clips from the popular game show Kaun Banega Crorepati that carried a fake voiceover of Amitabh Bachchan, likely created using AI.
