By Karen Rebelo

Fake ads made with artificial intelligence (AI) voice cloning technology are being promoted to users in India on Meta’s platforms, including Facebook, Instagram and the messaging app Messenger.

Decode found numerous such fake ads in the Meta Ad Library, peddling everything from get-rich-quick schemes, betting apps and trading platforms to diabetes drugs. The Ad Library is a publicly accessible database for studying political ads that was created in the aftermath of the Cambridge Analytica scandal.

We found 150 such URLs. However, restrictions on the visibility of these ads and the repetition of identical content make it difficult to quantify how many unique ads are circulating on these platforms.

These fake ads have been created with AI voice clones that impersonate the voices of well-known personalities in India. We found videos with voice clones of Shah Rukh Khan, Virat Kohli, Mukesh Ambani, Ratan Tata, Narayana Murthy, Akshay Kumar and Sadhguru, among others.

The synthetic voice-overs have been overlaid onto real videos of these individuals. Although the lip-sync is off in many of these videos, they provide a terrifying preview of a synthetic world that awaits us.

Decode also found that multiple fake profiles and pages have been set up to pay for promoting these ads on Meta’s platforms, boosting their visibility to users in India.

We also found fake ads impersonating the voices of popular Hindi news journalists, such as Ravish Kumar, to peddle a diabetes drug. Kumar rubbished the video on his X account.

AI voice clones in Hindi are disconcerting because they show that the technology has made a leap in producing convincing voice clones in languages other than English.

Fact-checkers fear the upcoming general election in 2024 could see a storm of AI-based misinformation, particularly voice clones.

“AI voice clones pose a greater risk than AI images or videos because of the advanced and sophisticated technology,” Morocco-based Abdellah Azzouzi, founder of AI Voice Detector, told Decode.

“In the early stages, I recognised the potential misuse of AI voices for scams and misinformation,” Azzouzi, who is a software engineer by training, said.

“Differentiating between a real voice and a cloned voice is challenging, as AI voices can now sound very convincing. While AI images may not yet be entirely realistic or logical, they are evolving. AI videos are also a concern, especially when they include cloned voices in the audio,” he added.

Get-Rich-Quick Schemes Powered By AI

Most of the fake ads we found were for get-rich-quick schemes.

The fraud involves pulling off two convincing cons. The first is the persona of a wealthy, good-looking fund manager living a jet-setting life.

Decode found fake identities such as Laila Rao, Suraj Sharma, Amir Khan, Priya Kaur and Sona Agarwal, along with numerous Facebook profiles and pages in these and other names that are part of the scam.

These fake personas were created by stealing photos and videos of real social media influencers without their knowledge.

For instance, the fake identities of Laila Rao and Priya Kaur have been created by misusing photos of fashion and beauty influencer Smriti Khanna.

Similarly, another fake persona, Suraj Sharma, was created by passing off photos of Dubai-based social media influencer Arslan Aslam.

When AI Puts Words In Their Mouths

The second part of the con involves cloning the voice of a popular Indian celebrity and overlaying the synthetic audio onto real videos of them, so that it looks and sounds as though the celebrity is endorsing these fake fund managers.

The cloned voices ask people to join Telegram groups or channels managed by Laila Rao, Suraj Sharma, Amir Khan and others.

Telegram, a messaging app similar to WhatsApp, is virtually unregulated in India, making it a preferred medium for carrying out such frauds. Like the maze of fake Facebook profiles, several Telegram groups and channels are also part of the scam.

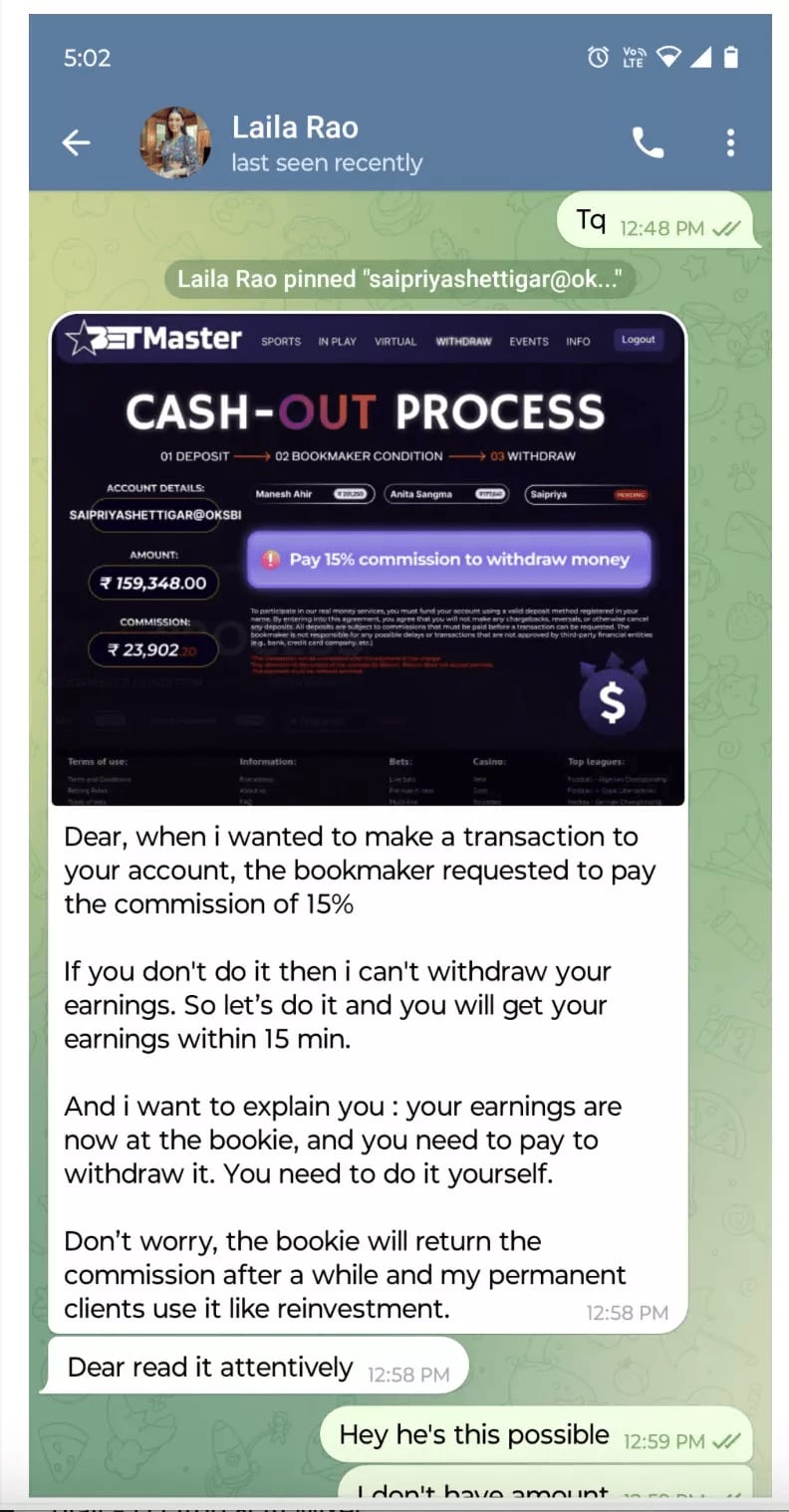

Once an unsuspecting person joins the Telegram group, they are bombarded with fake screenshots of rapidly growing account balances and fake testimonials from others who have supposedly multiplied their investments.

A screenshot obtained from a consumer complaint forum shows how “Laila Rao” kept asking for more money from a victim after receiving an initial investment, by falsely claiming a 15% commission had to be paid to a bookmaker to release the funds.

Laila Rao Telegram channel

The perpetrators stop texting and vanish once they manage to extract enough money.

Decode had earlier spoken to several women who got duped by the Laila Rao fraud. Read about their experiences here.

“...This phase of a new type of cyber crime assisted by AI is emerging. So what we are seeing here is deepfake, whether it is audio or whether it is video; they have started coming up,” Muktesh Chander, Special Monitor for Cybercrime and Artificial Intelligence at the National Human Rights Commission, said.

“So far, what we were seeing in cyber crime is 80-90% of that is Jamtara-type gangs who don’t have any type of sophisticated technology. They only rely on social engineering and on greed or fear or gullibility of people,” the retired Indian Police Service official said.

Fraudulent Betting Apps And Fake AI Trading Platforms

Voice cloning is also being used to make ads promoting bogus crypto and AI trading platforms.

Decode found several videos containing voice clones of Infosys co-founder Narayana Murthy, in which he appears to talk about stock markets and AI and to promise investors sky-high returns from a tool called Quantum AI.

We also found videos with voice clones of actor Akshay Kumar advertising a fake gambling app called Aviator India.

Muktesh Chander said platforms have a responsibility when it comes to curbing deepfakes.

“Right now, they are checking if there is a copyright or not. You upload a song…like I love the flute…I play the flute and upload a song (to YouTube). Immediately, I get a copyright notice.

“Why don’t they have an algorithm for detecting deepfakes?” he asked.

Chander said tackling deepfakes will require detection technology, changes to existing laws, accountability from platforms and public awareness.

Decode has reached out to Meta for comment. This article will be updated if we receive a reply.