If you’ve ever debated someone convinced that 9/11 was an inside job, or that astronauts never made it to the Moon and the whole thing was shot in some Hollywood studio, you know the struggle. The consensus among psychologists is that conspiracy theories, once entrenched in the psyche, are extremely difficult to dispel, largely because these views can become part of a person’s identity. Conspiracy theories also meet important psychological needs and motivations. For some, they provide a clear and straightforward explanation for a chaotic situation.

But are conspiracy theories really such a hard nut to crack? Researchers at MIT’s Sloan School of Management and Cornell University’s Department of Psychology argue that past attempts to correct conspiracy beliefs fell short because they used the wrong approach when presenting counterevidence. Stating facts is important, the researchers argue, but what has been missing is presenting them in a compelling way, tailored to each believer’s specific version of the conspiracy theory. Even when two people subscribe to the same theory, their motivations and reasons for doing so can be very different.

To test this idea, the researchers used an AI chatbot powered by OpenAI’s GPT-4 Turbo model. Through personalized dialogue, the AI significantly reduced participants’ belief in conspiracy theories. These findings not only challenge existing psychological theories about the stubbornness of such beliefs but also introduce a potential new method for addressing misinformation at scale.


The appeal of conspiracy theories

A well-known challenge with conspiracy theories is their stubborn resistance to contradiction and correction. They provide seemingly neat explanations for complex global events, attributing them to the machinations of shadowy, powerful groups. This ability to make sense of randomness and chaos fulfills a deep psychological need for control and certainty in believers.

Challenging such beliefs is often perceived as a threat to the believer’s self-identity and social standing. This renders the believer especially resistant to change. Additionally, these theories have a self-insulating quality where attempts to debunk them can paradoxically be seen as evidence of the conspiracy’s reach and power.

People who hold conspiratorial beliefs tend to engage in motivated reasoning. Often, they will selectively process information to confirm their existing biases and dismiss evidence to the contrary. This biased processing is compounded by the intricate and seemingly coherent justifications believers construct, which can make them appear logical within their self-contained belief system.

Yet rather than throwing up their hands in the face of conspiracy beliefs, the authors of the new study challenge the prevailing notion that these beliefs are largely immutable.

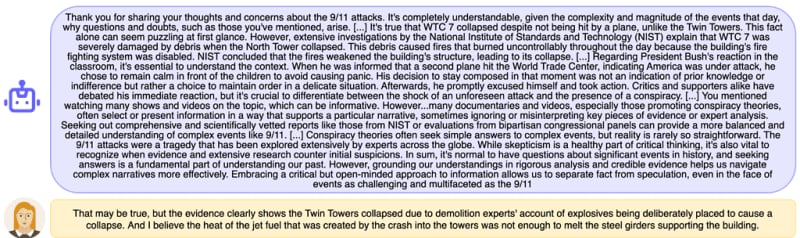

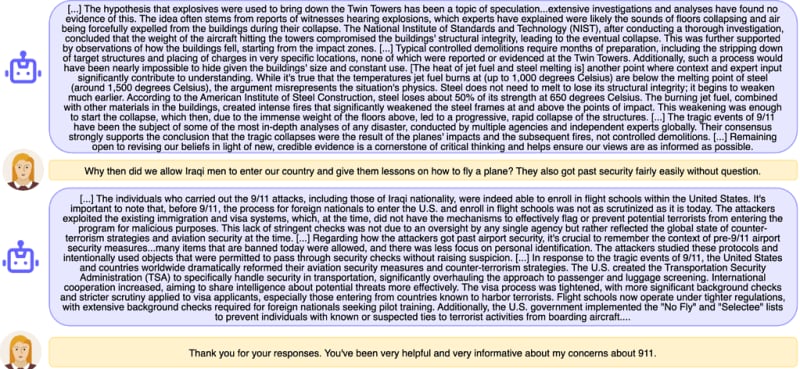

“Here, we question the conventional wisdom about conspiracy theorists and ask whether it may, in fact, be possible to talk people out of the conspiratorial ‘rabbit hole’ with sufficiently compelling evidence. By this line of theorizing, prior attempts at fact-based intervention may have failed simply due to a lack of depth and personalization of the corrective information. Entrenched conspiracy theorists are often quite knowledgeable about their conspiracy of interest, deploying prodigious (but typically erroneous or misinterpreted) lists of evidence in support of the conspiracy that can leave skeptics outmatched in debates and arguments,” the researchers wrote.

“Furthermore, people believe a wide range of conspiracies, and the specific evidence brought to bear in support of even a particular conspiracy theory may differ substantially from believer to believer. Canned persuasion attempts that argue broadly against a given conspiracy theory may not successfully address the specific evidence held by the believer — and thus may fail to be convincing.”

Tailoring responses to individual beliefs

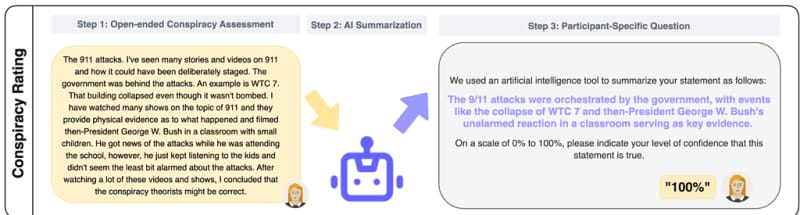

The study involved 2,286 participants who identified as conspiracy theory believers. Researchers collected and analyzed data on participants’ beliefs before and after the interactions, as well as at two follow-up points.

Participants began by describing a conspiracy theory they believed in and then provided the evidence they found convincing. The conspiracy theories ranged from politically charged topics like fraud in the 2020 US presidential election to public health topics such as the origins and management of the COVID-19 pandemic. The AI then generated a summary of their beliefs, which participants rated for persuasiveness.

Depending on their group assignment, participants either discussed their conspiracy beliefs with the AI, which attempted to correct their misconceptions, or engaged in a control conversation about a neutral topic.

Persuasive AI

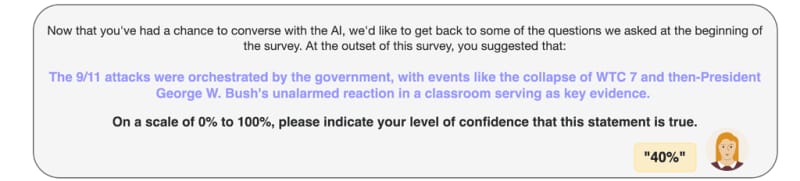

The results were striking: those who discussed their conspiracy theories with the AI showed a significant reduction in belief intensity. Specifically, belief in conspiracy theories dropped by an average of 21.43% among those in the treatment group, compared to just a 1.04% decrease in the control group. This effect persisted across the two-month follow-up period, suggesting a durable change in belief.

“This was the case even though participants articulated in their own words a specific conspiracy theory they believed in (rather than choosing from a pre-selected list); occurred even among those participants most committed to their conspiratorial beliefs; and produced a persistent effect that not only lasted for two months, but was virtually undiminished in that time. This indicates that the dialogue produced a meaningful and lasting change in beliefs for a substantial proportion of the conspiracy believers in our study,” wrote the authors of the new study, which appeared as a preprint on PsyArXiv.

Rather than treating conspiracy beliefs as a way of fulfilling psychological “needs” and “motivations,” these findings suggest an alternative framework for explaining conspiracy thinking. Like belief in superstitions, the paranormal, and “pseudo-profound bullshit,” conspiracy beliefs may simply arise from a momentary failure to reason, reflect, and deliberate properly.

“This suggests that people may fall into conspiracy beliefs rather than actively seeking them as a means of fulfilling psychological needs. Consistent with this suggestion, here we show that when confronted with an AI that compellingly argues against their beliefs, many conspiracists, even those strongly committed to their beliefs, do in fact update their views,” the researchers wrote.

A force for good or bad

By showing that AI can effectively engage some people and persuade them to reconsider deeply held but incorrect beliefs, the study opens new avenues for combating misinformation and enhancing public understanding. It also highlights the potential for such AI tools to be developed into applications deployed at a larger scale. They could even be integrated into the social media platforms where conspiracy theories often gain traction.

However, AI is a double-edged sword. Generative technology can supercharge disinformation by making it much cheaper and easier to produce and distribute conspiracies. Real-time chatbots could share disinformation in very credible and persuasive ways, tailor-made for each individual’s personality and previous life experience — just like what this study showed, but in reverse.

“This tool is going to be the most powerful tool for spreading misinformation that has ever been on the internet,” Gordon Crovitz, a co-chief executive of NewsGuard, told The New York Times, speaking about ChatGPT. “Crafting a new false narrative can now be done at dramatic scale, and much more frequently — it’s like having A.I. agents contributing to disinformation.”


This story originally appeared on ZME Science.