Since summer 2023, you have been able to prevent the crawler of the AI company OpenAI from reading your website and feeding its content into ChatGPT. This artificial intelligence is available at https://chat.openai.com, via Microsoft at www.chat.bing.com, and in a variety of Microsoft products.

Advantages of the crawler ban: Once the AI crawler is blocked, the text and images on your website will no longer be used to train the ChatGPT artificial intelligence in the future.

However, content that has already been collected will not be retroactively removed from ChatGPT's knowledge base. The AI crawlers of other providers will also not adhere to the ban for the time being. So far, OpenAI is the first and only company to have committed to honoring the crawler ban.

How it works: The classic method of blocking crawlers is a simple text file named robots.txt, saved in the root directory of your web space. In robots.txt, you specify what you want to block on your website. For example, if you write

User-agent: GPTBot
Disallow: /

in the file, the scanning ban applies only to OpenAI's crawler (GPTBot). That crawler is denied access to the entire website (/). However, you can also allow the crawler to access certain folders on your website while denying it access to others. The rules then look like this:

User-agent: GPTBot
Allow: /Folder-1/
Disallow: /Folder-2/

Replace “Folder-1” and “Folder-2” with the names of the folders that you want to allow or protect. To block all crawlers from the entire website, the robots.txt looks like this:

User-agent: *
Disallow: /
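Before uploading a robots.txt, you can check locally how a standards-following crawler would interpret it. The following is a minimal sketch using Python's standard `urllib.robotparser` module. The helper name `is_allowed`, the domain `www.example.com` and the page paths are hypothetical. One caveat applies: Python's parser applies the first matching rule in file order, while Google documents a most-specific-rule approach. Overlapping Allow/Disallow paths can therefore be judged differently, but for the non-overlapping folders above this makes no difference.

```python
from urllib.robotparser import RobotFileParser

# The three robots.txt variants from the article, one rule per line.
BLOCK_GPTBOT = """\
User-agent: GPTBot
Disallow: /
"""

GPTBOT_FOLDERS = """\
User-agent: GPTBot
Allow: /Folder-1/
Disallow: /Folder-2/
"""

BLOCK_ALL = """\
User-agent: *
Disallow: /
"""


def is_allowed(robots_txt: str, agent: str, url: str) -> bool:
    """Return True if `agent` may fetch `url` under the given robots.txt text."""
    parser = RobotFileParser()
    # parse() takes an iterable of lines, so no network access is needed.
    parser.parse(robots_txt.splitlines())
    # Pass the product token ("GPTBot"), not the full User-Agent header:
    # robotparser only compares the part before the first "/".
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    site = "https://www.example.com"  # hypothetical domain
    print(is_allowed(BLOCK_GPTBOT, "GPTBot", site + "/index.html"))           # False
    print(is_allowed(BLOCK_GPTBOT, "Googlebot", site + "/index.html"))        # True
    print(is_allowed(GPTBOT_FOLDERS, "GPTBot", site + "/Folder-1/page.html"))  # True
    print(is_allowed(GPTBOT_FOLDERS, "GPTBot", site + "/Folder-2/page.html"))  # False
    print(is_allowed(BLOCK_ALL, "Googlebot", site + "/index.html"))           # False
```

The results show that the GPTBot-only file leaves other crawlers such as Googlebot unaffected, while `User-agent: *` applies to every crawler.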

OpenAI and Google both provide further information on robots.txt.

Important: It is generally assumed that crawlers follow the instructions in robots.txt. Technically, however, the file offers no protection. A malicious programmer can instruct their crawlers to ignore robots.txt and scan the contents of your website anyway.
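The reason robots.txt offers no technical protection is that the check takes place in the crawler's own code, not on your server. The following is a minimal, hypothetical sketch of that decision. The function `crawl_decision` and its `honor_robots` switch are invented for illustration, and nothing is actually downloaded. A polite crawler consults the rules, while one that skips the check simply fetches the page.

```python
from urllib.robotparser import RobotFileParser

# The GPTBot ban from the article.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /
"""


def crawl_decision(agent: str, url: str, honor_robots: bool) -> str:
    """Decide what a crawler does with `url`; this sketch downloads nothing."""
    if honor_robots:
        parser = RobotFileParser()
        parser.parse(ROBOTS_TXT.splitlines())
        if not parser.can_fetch(agent, url):
            return "skip"
    # A crawler that never consults robots.txt always reaches this line:
    # the web server itself enforces nothing.
    return "fetch"


url = "https://www.example.com/article.html"  # hypothetical page
print(crawl_decision("GPTBot", url, honor_robots=True))   # skip
print(crawl_decision("GPTBot", url, honor_robots=False))  # fetch
```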

IDG

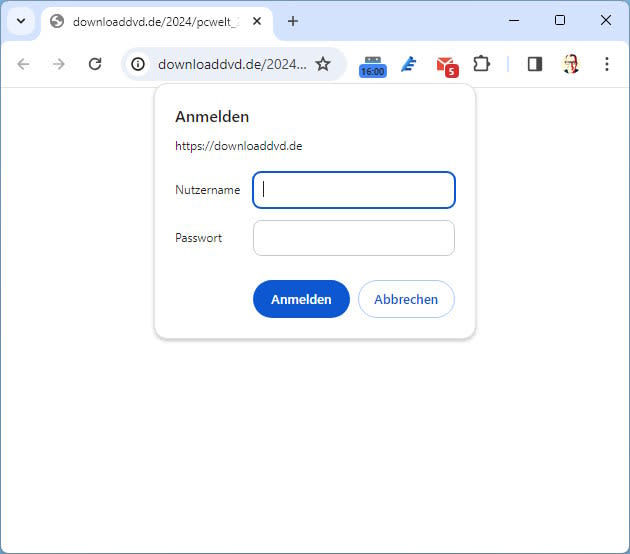

Much more secure: If you want to protect particularly valuable content from AI and other crawlers, you can password-protect these parts of your website and give the login details only to authorized persons. The disadvantage: This part of the website is then no longer accessible to the public. You control this access protection via two files, .htpasswd and .htaccess. The .htpasswd file contains the user name and the password in hashed (encrypted) form.
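Each line of an .htpasswd file has the form `user:hash`. The sketch below assumes an Apache web server and uses Apache's "{SHA}" format (base64-encoded SHA-1), chosen only because Python's standard library can produce it. Apache's documentation considers SHA-1 insecure. On a real site, the `htpasswd` tool with `-B` (bcrypt) is the better choice. The helper names and the example user "editor" are hypothetical.

```python
import base64
import hashlib
import hmac


def sha_htpasswd_line(user: str, password: str) -> str:
    """Build an .htpasswd line in Apache's "{SHA}" format (base64 of SHA-1)."""
    digest = hashlib.sha1(password.encode("utf-8")).digest()
    return f"{user}:{{SHA}}{base64.b64encode(digest).decode('ascii')}"


def check_htpasswd_line(line: str, user: str, password: str) -> bool:
    """Check a user/password pair against one "{SHA}" .htpasswd line."""
    stored_user, _, stored_hash = line.strip().partition(":")
    if stored_user != user or not stored_hash.startswith("{SHA}"):
        return False
    expected = sha_htpasswd_line(user, password).partition(":")[2]
    # Constant-time comparison of the two hash strings.
    return hmac.compare_digest(stored_hash.encode("utf-8"), expected.encode("utf-8"))


line = sha_htpasswd_line("editor", "password")
print(line)  # editor:{SHA}W6ph5Mm5Pz8GgiULbPgzG37mj9g=
print(check_htpasswd_line(line, "editor", "password"))  # True
print(check_htpasswd_line(line, "editor", "wrong"))     # False
```

The file stores only the hash, not the password itself. The server repeats the same calculation on each login and compares the results.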

The .htaccess file defines which folders or files are to be protected with the password and where the .htpasswd file is located on the server. You can find an explanation of the content of the files here.
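A typical .htaccess for this purpose contains only a handful of Apache directives. The following is a minimal sketch assuming an Apache server whose configuration permits authentication directives in .htaccess (`AllowOverride AuthConfig`). The helper name, the realm text and the path `/home/example/.htpasswd` are hypothetical. Placed in a folder, the resulting file protects that folder and its subfolders. The .htpasswd path should be absolute, because Apache treats relative paths as relative to its ServerRoot, and the file is best kept outside the publicly accessible web directory.

```python
def htaccess_basic_auth(htpasswd_path: str, realm: str = "Protected area") -> str:
    """Return .htaccess directives that require a login for this folder."""
    return "\n".join([
        "AuthType Basic",                   # HTTP Basic authentication
        f'AuthName "{realm}"',              # text shown in the login prompt
        f"AuthUserFile {htpasswd_path}",    # where the .htpasswd file lives
        "Require valid-user",               # any user listed in .htpasswd
    ]) + "\n"


print(htaccess_basic_auth("/home/example/.htpasswd"))
```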

This article was translated from German to English and originally appeared on pcwelt.de.