By Nidhi Jacob

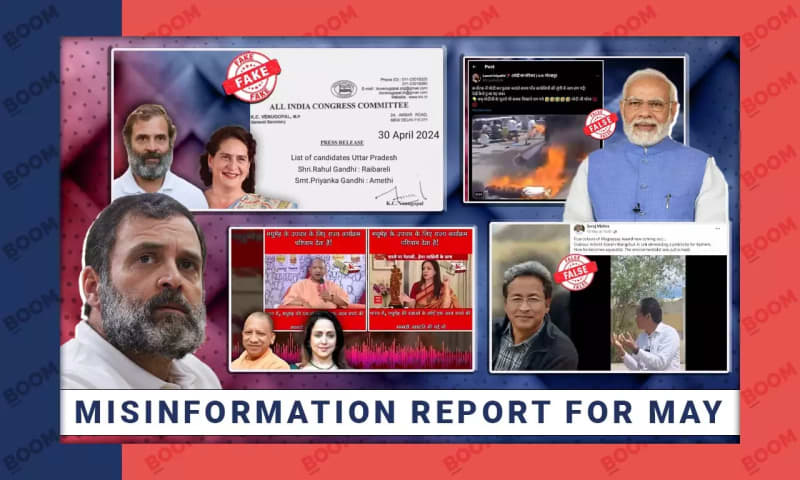

In May, BOOM published 128 fact-checks in English, Hindi and Bangla. We observed the highest occurrence of AI-generated fake news this year in May. From news channels predicting exit poll wins for political parties to celebrities promoting diabetes pills, we published 12 fact-checks involving deepfakes, artificial intelligence-generated images, and voice clones.

The Lok Sabha elections 2024, held between April 19 and June 1, were the leading topic of misinformation last month, accounting for 101 of the 128 fact-checks published by BOOM.

Additionally, the Muslim community was the primary target of mis/disinformation, with 10 fact-checks.

Theme Assessment

AI Generated Mis/Disinformation

BOOM debunked 12 AI-generated pieces of misinformation, including 6 voice clones, 4 deepfakes, and 2 images. However, of these only 2 were related to the Lok Sabha elections.

A video purporting to show news anchor Sudhir Chaudhary predicting a win for Mahabal Mishra, the Aam Aadmi Party (AAP) candidate from West Delhi, went viral online.

BOOM found that the video was a deepfake, created using a voice clone of Chaudhary. It featured fake graphics of an exit poll predicting Mishra's win, even though neither Aaj Tak nor the India Today Group had released any exit polls for the Lok Sabha elections. Additionally, we analyzed the audio clip of Chaudhary's video using two deepfake detection tools: Itisaar, developed by IIT Jodhpur, and Contrails.AI. Itisaar identified the audio as a fake "generative AI voice swap," while Contrails classified the viral video as an "AI Audio Spoof."

Similarly, a video claiming to depict News24's Manak Gupta predicting a victory for the AAP's candidate Mahabal Mishra from West Delhi in the Lok Sabha elections was fake. The video was created using an AI-generated voice clone of Gupta. We found that both Manak Gupta and the verified handle of Today's Chanakya exit poll on X clarified that the video was a deepfake.

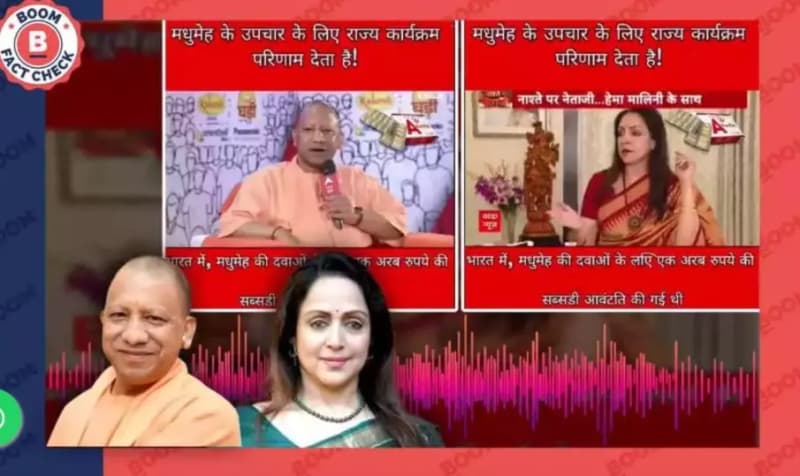

Apart from the election-related AI fake news, four of our fact-checks involved AI-generated voice clones of celebrities promoting a fake diabetes cure.

For instance, sponsored videos on Facebook falsely show Yogi Adityanath, Hema Malini, Akshay Kumar, and journalist Sudhir Chaudhary promoting a diabetes cure. The videos, which include an external link for pricing, were created using AI-generated voice clones over cropped original interviews. The misleading content was shared by accounts like Good lobs, Lenin Grib, Dr GR Badlani and others. One link redirected to the website of diabetologist Dr. GR Badlani, who refuted the claims and clarified he does not sell such medicines. "This is wrong. I have a diabetes center but I do not sell such medicines. For the last few days, I have been getting calls from people asking me for medicines to cure diabetes," Dr. Badlani told BOOM. Despite receiving calls about the ads, he couldn't find them on Facebook to report to the police.

Lok Sabha elections 2024

BOOM published 101 fact-checks related to the 2024 Lok Sabha elections in May. Claims in 74 of the 101 fact-checks were circulated with the intention of spreading fake and sensationalist content. For example, a fake letter circulated widely on social media claimed that Prashant Kishor, chief of the Jan Suraaj organization, had been appointed as the national spokesperson of the Bharatiya Janata Party (BJP). The letter falsely stated, "BJP National President Shri Jagat Prakash Nadda has appointed Shri Prashant Kishor as the National Chief Spokesperson of BJP. This appointment comes into immediate effect." However, Kishor's office confirmed to BOOM that the letter was fake, and Jan Suraaj also denied the claim on X.

In another instance, the official X accounts of the BJP in West Bengal and Tripura posted a photo of a metro on an elevated line, misleadingly claiming it showcased the development of metro railway services achieved by the BJP-led government in India. However, we found that the photo was actually from Jurong East MRT station in Singapore. The image was originally published on a website run by the Singapore government to promote the country's transportation convenience to the global community.

Furthermore, 25 fact-checks were related to smear campaigns against politicians from various parties, with Rahul Gandhi being the primary target of mis/disinformation.

Smear campaigns targeting Rahul Gandhi included an offensive morphed cartoon of him on the cover page of TIME magazine and a doctored video of him allegedly saying that the Congress party wants to destroy Indian democracy and the Constitution, among other misleading and false content.

BOOM also debunked communal claims related to the elections. In his speech, PM Modi accused the Congress party's manifesto of making communal remarks, claiming that if Congress assumed power, they would conduct a comprehensive survey of all assets in the country, including the gold owned by women, with the intention of redistributing assets to Muslims. BOOM found these claims to be misleading. The manifesto does not explicitly mention Muslims or "wealth redistribution." Instead, it suggests the need for policy assessment without outlining specific wealth redistribution plans.

Non-election related Communal claims

We published around six non-election related fact-checks involving communal claims. One such case involved a disturbing video of a gym trainer assaulting a woman inside a gymnasium in West Bengal's Ranaghat. The video was circulated with captions falsely claiming that a Muslim trainer attacked Hindu girls. Additionally, posts on X featured a screengrab of actor Kay Kay Menon from the film Shaurya, referencing Menon's dialogue where he calls infiltrators pests.

BOOM reached out to the Superintendent of Police, Ranaghat Police District, who confirmed that there was no communal angle to the incident and stated that both the victim and the perpetrator are from the same community.

In another fact-check, a video of a crowd tying a cow to a jeep went viral on social media, with communal claims that people of the Muslim community were openly slaughtering cows in Karnataka. BOOM investigated and found this claim to be false. The viral video was actually from Wayanad in Kerala, taken in February 2024, when locals, frustrated with wild animal attacks, tied a dead cow to a forest department vehicle and blocked the road in protest.